The Convolution Engine

In this article, we design a fully parameterized 2D convolution engine in Verilog HDL and verify its functionality against a golden model written in Python.


Part One - The Architecture Outline

Part Two - The Convolution Engine

Part Three - The Activation Function

Part Five - Adding fixed-point arithmetic to our design

Part Six - Putting it all together: The CNN Accelerator

Part Seven - System integration of the Convolution IP

Part Eight - Creating a multi-layer neural network in hardware.

- From here on, we shall refer to a convolution layer as a convlayer.

- Our design implements a fully parameterized convolution operation in Verilog. By 'fully parameterized', I mean that the same code can be used to synthesize a convlayer of any size the architecture demands (conv3x3, conv7x7, conv11x11). You just have to vary the parameters passed to this code before synthesis.

- The primary idea behind this design is to build a highly pipelined streaming architecture in which the processing module never has to stop. That is, no part of the convolver waits for another part to finish its work and hand over a result. Every clock cycle, each stage performs a small portion of the overall work on a different part of the input. This is not unique to this design; it is a general principle called 'pipelining', widely used to break large computations into smaller steps and thereby raise the maximum frequency at which the whole circuit can operate.

- Our design uses MAC (Multiply and Accumulate) units with the aim of mapping these operations to the DSP blocks of the FPGA. Achieving that makes the multiplication and addition operations much faster and less power-hungry, since the DSP blocks are implemented as hard macros. That is to say, the DSP blocks have already been synthesized, placed, and routed into silicon on the FPGA device in the most efficient way possible, unlike general IP blocks, where you are only supplied with tried and tested RTL code that you synthesize yourself.

NOTE: This design unit was implemented on an FPGA as a part of a complete Processor + FPGA system to measure the actual acceleration it provides. You can read about it in Part Seven - System integration of the Convolution IP.


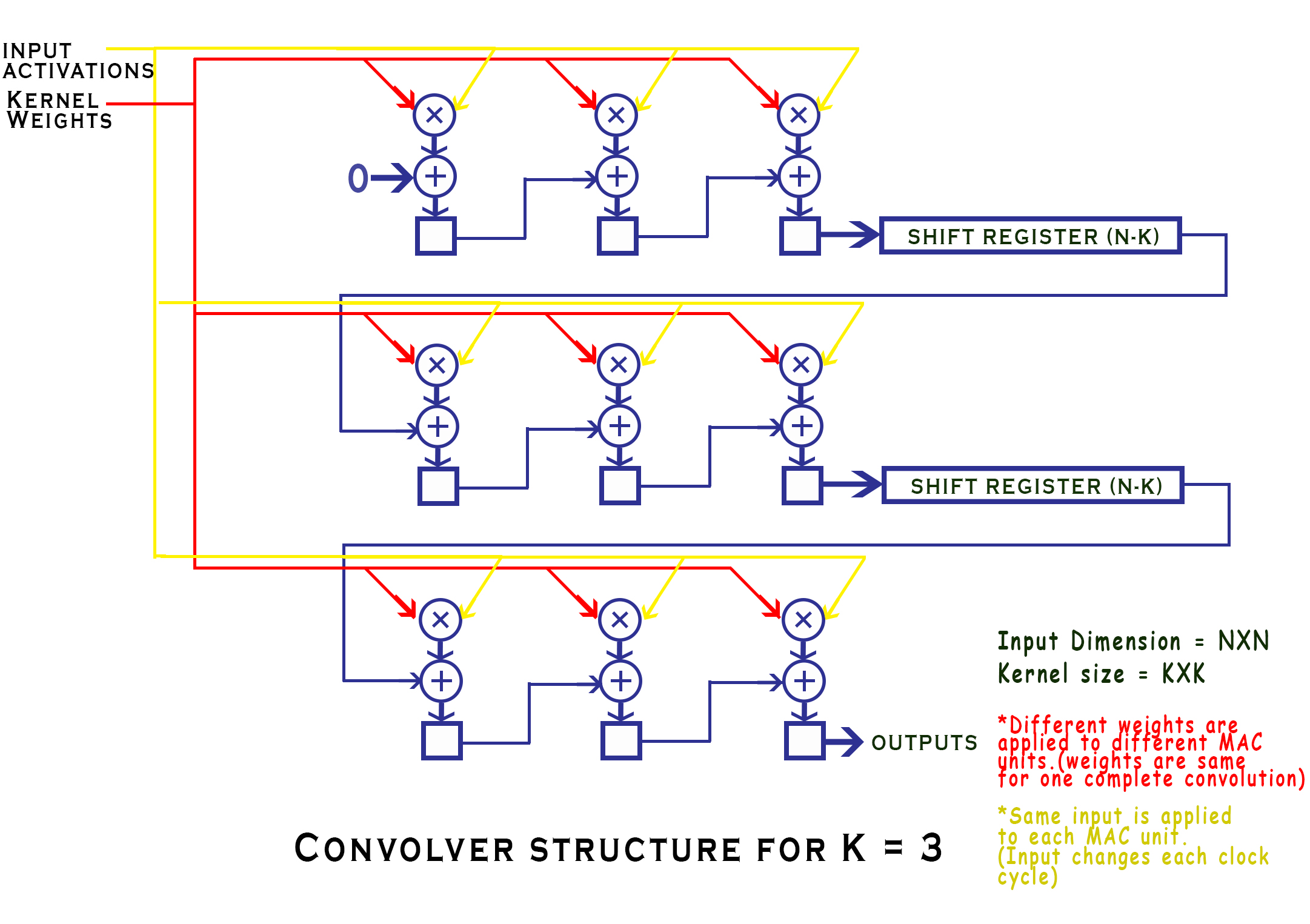

The convolver is nothing but a set of MAC units and a few shift registers which, when supplied with the right inputs, output the result of the convolution after a fixed number of clock cycles. Let's dig into it!

- Let us assume the input feature map (image) has dimensions NxN and our kernel (filter/window) has dimensions KxK. Naturally, K < N.

- s represents the stride with which the window moves over the feature map.

Now, if you know how 2-D convolution works, you know that the output feature map will be smaller than the input feature map. Precisely, if we do not apply any zero padding to the input feature map, the output will be of size

(floor((N-K)/s) + 1) x (floor((N-K)/s) + 1)

which reduces to (N-K+1) x (N-K+1) for a stride of 1.
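As a quick sanity check on the output size, here is a minimal sketch in Python. It assumes no zero padding and uses the standard floor((N-K)/s) + 1 expression for each side; the helper name conv_output_size is only illustrative and is not part of the project's code.

```python
def conv_output_size(n, k, s=1):
    """Side length of the output feature map for an n x n input,
    a k x k kernel and stride s, with no zero padding."""
    if k > n or s < 1:
        raise ValueError("need k <= n and s >= 1")
    # The window can start at offsets 0, s, 2s, ... up to n - k.
    return (n - k) // s + 1


for n, k, s in [(4, 3, 1), (5, 3, 2), (224, 11, 4)]:
    size = conv_output_size(n, k, s)
    print(f"N={n}, K={k}, s={s} -> {size} x {size} outputs")
```

For a 4x4 input and a 3x3 kernel with stride 1 this gives a 2x2 output, which is the case used in the rest of this article.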

As you can see in the image, our convolver has MAC units laid out in the shape of the convolution kernel, with a couple of shift registers connected at various levels in the pipeline. The things to note about the inputs to these MAC units are:

- The kernel weights are placed such that each MAC unit holds a fixed weight throughout the convolution, but each MAC unit holds a different weight according to its position.

- The input activations, on the other hand, are broadcast: the same value is placed on every MAC unit, and that value changes every clock cycle as the input feature map is streamed in.

Additionally, we have a few more signals, such as valid_conv and end_conv, which tell the outside world whether the outputs of this convolver module are valid. But why would the convolver produce invalid results in the first place? After the window is done moving over a particular row of the input, it wraps around to the next row, and the outputs produced during this wrap-around are invalid. We could avoid computing during the wrap-around period, but that would require us to stop the pipeline, which goes against our streaming design principle. So instead we simply discard the outputs we know to be invalid with the help of the valid_conv signal. Look at the following animation to understand this better.

This animation shows how the various inputs to the convolver change with each clock cycle, and also tracks the value of a particular variable as it makes its way to the output. This shows the mathematical reasoning behind the design and how the convolution is actually being calculated.

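If you observe the final value of x, it is

w0*a0 + w1*a1 + w2*a2 + w3*a4 + w4*a5 + w5*a6 + w6*a8 + w7*a9 + w8*a10

which is exactly the 3x3 kernel applied to the top-left window of a 4x4 input whose activations are numbered row by row.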
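The dataflow described above can be mimicked in a few lines of Python. This is a behavioural sketch only, not the Verilog from this series: the function name stream_convolve is hypothetical, the shift registers between kernel rows are assumed to be N-K deep (the depth that reproduces the index pattern above), and the exact cycle latency of the real RTL may differ.

```python
from collections import deque


def stream_convolve(activations, weights, n, k, s=1):
    """Cycle-by-cycle model of the streaming convolver (a sketch).

    activations: flat row-major list of n*n values, one consumed per clock.
    weights:     flat row-major list of k*k values, one fixed per MAC unit.
    Returns only the outputs for which valid_conv would be high.
    """
    mac = [0] * (k * k)  # output register of every MAC unit
    # Assumed: one shift register of depth n-k between consecutive kernel rows.
    delay = [deque([0] * (n - k)) for _ in range(k - 1)]
    valid_outputs = []

    for t, a in enumerate(activations):  # the same activation reaches every MAC
        prev = mac[:]                    # register contents before this clock edge
        for j in range(k * k):
            if j == 0:
                partial = 0              # first MAC has nothing to accumulate
            elif j % k == 0:             # first MAC of a row: fed by a shift register
                line = delay[j // k - 1]
                partial = line[0] if line else prev[j - 1]
            else:                        # otherwise fed by the neighbouring MAC
                partial = prev[j - 1]
            mac[j] = weights[j] * a + partial
        for i, line in enumerate(delay): # advance the shift registers by one step
            if line:
                line.popleft()
                line.append(prev[(i + 1) * k - 1])

        row, col = divmod(t, n)
        # valid_conv: the window ending at (row, col) lies fully inside the
        # image and starts on a stride boundary; wrap-around results are dropped.
        inside = row >= k - 1 and col >= k - 1
        if inside and (row - k + 1) % s == 0 and (col - k + 1) % s == 0:
            valid_outputs.append(mac[-1])
    return valid_outputs


# 4x4 input holding 0..15 and an all-ones 3x3 kernel
print(stream_convolve(list(range(16)), [1] * 9, n=4, k=3))  # [45, 54, 81, 90]
```

Note that the loop never pauses: one activation is consumed on every iteration, and the wrap-around results are computed but simply not kept.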

The Verilog code for the convolution unit is shown in the code section further below. It is fairly well commented and uses a Verilog generate loop to simplify the instantiation of all those MAC units. The code is also fully parameterized, meaning you can scale it to any N and K you wish, depending on which neural network you are building.

ADVANTAGES OF THIS ARCHITECTURE:

- The input feature map has to be sent in only once (i.e., the stored activations have to be read from memory only once), which greatly reduces memory access time and storage requirements. The shift registers act as a kind of local cache for previously accessed values.

- The convolution for an input of any size can be calculated with this architecture without having to split the input or temporarily store it elsewhere because of limited compute capability.

- The stride value can also be changed for some exotic architectures where a larger stride is required.
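To put a rough number on the first advantage, the sketch below compares activation reads from memory. It assumes a deliberately naive baseline that fetches all K*K activations again for every output window with no caching at all; real implementations usually sit somewhere in between. The helper name activation_reads is illustrative.

```python
def activation_reads(n, k, s=1):
    """Return (naive_reads, streaming_reads) for an n x n input,
    a k x k kernel and stride s, with no padding."""
    out = (n - k) // s + 1          # output side length
    naive = out * out * k * k       # every window re-reads its k*k activations
    streaming = n * n               # each activation is streamed in exactly once
    return naive, streaming


naive, streaming = activation_reads(n=32, k=3)
print(naive, streaming)  # 8100 1024
```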

Now let's dig into:

The Code

Before we start writing Verilog for our design, we need a golden model, i.e., a basic software model that achieves the functionality we are targeting in hardware. You can write it in whichever language you like, but I chose Python because I love Python.

[Code listing: Python golden model]

Running the above code gives us:

[Listing: output of the golden model]

So our goal is to design a Verilog module that produces the same outputs as the above Python function for any given inputs.
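For reference, a golden model of this kind can be as small as the following. This is a minimal pure-Python sketch rather than the exact listing used in this project: the function name conv2d_golden is hypothetical, the input and kernel are assumed to be square lists of lists, and no padding is applied. As in the hardware, the kernel is not flipped.

```python
def conv2d_golden(image, kernel, stride=1):
    """Reference 2-D convolution (no padding, kernel not flipped)."""
    n, k = len(image), len(kernel)
    out = (n - k) // stride + 1     # output side length
    result = []
    for r in range(out):
        row = []
        for c in range(out):
            acc = 0
            # Multiply-accumulate over the k x k window at (r*stride, c*stride).
            for i in range(k):
                for j in range(k):
                    acc += image[r * stride + i][c * stride + j] * kernel[i][j]
            row.append(acc)
        result.append(row)
    return result


image = [[4 * r + c for c in range(4)] for r in range(4)]   # values 0..15
kernel = [[1, 0, -1], [1, 0, -1], [1, 0, -1]]
print(conv2d_golden(image, kernel))  # [[-6, -6], [-6, -6]]
```

The hardware outputs captured while valid_conv is high can then be compared, in order, against the flattened result of such a function.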

NOTE on utilizing DSP blocks for MAC units: when the synthesis tools encounter a multiply-and-accumulate operation, they usually implement it in a DSP block on the FPGA instead of regular slice logic. However, in some cases, such as designs with high resource utilization, the tools run out of DSP blocks and implement the multipliers and adders in regular slice logic instead. To be sure, we could use the (* use_dsp = "yes" *) attribute in Xilinx tools, or a similar attribute in other tools (extract_mac for Altera tools), to force the use of the DSP resources on the FPGA for that particular module. However, this is not necessary in most cases.

This document from Xilinx and this one from Intel will tell you more about this.

I have written lots of comments in the code to better explain it:

[Code listing: convolver.v, the parameterized convolver module]

As you can see in the above code, we use MAC units and shift registers to lay out the planned architecture. The shift register is a straightforward module that takes the data width and depth as parameters. The mac_manual module is also quite simple to implement, at least when we are working with integers.

[Code listing: mac_manual module]

Verifying our design with a testbench

[Code listing: testbench for the convolver]

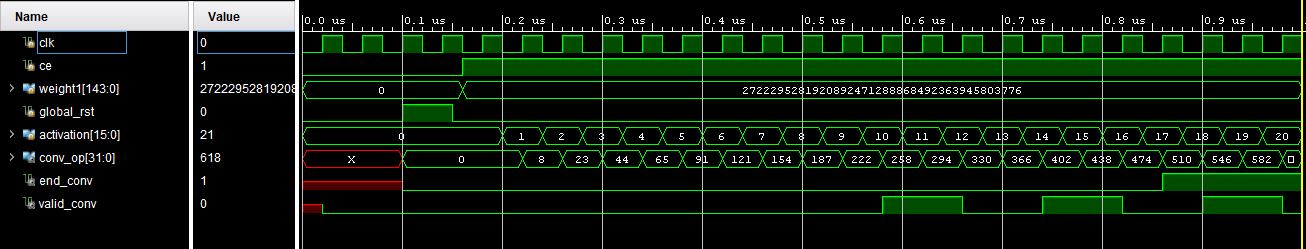

Running the above test bench gives us the following result in Vivado:

The values present while the valid_conv signal is high are the convolution outputs. It's quite clear that these outputs match the ones the Python script gave us for the same input.

Here is a link to the exact git commit at this point, i.e., before adding fixed-point arithmetic support to this project.

Fixed-point Arithmetic

You might want to read this post from later in this series and then come back to understand the changes we're about to make.

The advantage of a fully modular design like ours is that we only have to change the arithmetic (addition and multiplication) modules to get the whole thing working with fixed-point numbers. Nothing else needs to change, as the binary point exists only in our heads. The hardware still sees only regular integers (bit fields).
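The point about the binary point living only in our heads can be illustrated with a small Python sketch. It assumes a two's-complement format with 8 fractional bits and ignores bit widths and overflow; the qmult and qadd modules used in this project may represent numbers differently, and the names below are illustrative only.

```python
FRAC_BITS = 8  # assumed number of fractional bits


def to_fixed(x):
    """Encode a real number as a plain integer scaled by 2**FRAC_BITS."""
    return int(round(x * (1 << FRAC_BITS)))


def to_real(q):
    """Decode a fixed-point integer back to a real number."""
    return q / (1 << FRAC_BITS)


def mac_fixed(a, b, c):
    """Fixed-point multiply-accumulate using only integer operations.
    The product carries 2*FRAC_BITS fractional bits, so it is shifted
    right by FRAC_BITS before the addition."""
    return ((a * b) >> FRAC_BITS) + c


a, b, c = to_fixed(1.5), to_fixed(2.25), to_fixed(0.5)
print(a, b, c)                        # 384 576 128
print(to_real(mac_fixed(a, b, c)))    # 3.875
```

Everything inside mac_fixed is ordinary integer arithmetic; only to_fixed and to_real know where the binary point sits.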

[Code listing: mac_manual_fp module]

We just need to change the convolver.v module to call mac_manual_fp instead of mac_manual. To understand how the qmult and qadd arithmetic modules work, you might wanna go through this post and come back.


All the design files, along with their test benches, can be found at the GitHub repo.
