Adding fixed-point arithmetic to your design

In this article we convert the ordinary additions and multiplications in our design to fixed-point representations. This will enable us to work with fractional numbers.

This article is part of a series in which we implement an FPGA-based Convolutional Neural Network accelerator. However, its content is useful for understanding the fixed-point number representation and using it in any hardware design. You can follow the general principle and use the code even if you aren't interested in the rest of the series.

Part One - The Architecture Outline

Part Two - The Convolution Engine

Part Three - The Activation Function

Part Five - Adding fixed-point arithmetic to your design

Part Six - Putting it all together: The CNN Accelerator

Part Seven - System integration of the Convolution IP

Part Eight - Creating a multi-layer neural network in hardware.

Why do we need a number representation system?

To apply our hardware to real-life inputs and data, it needs to be able to interpret the various formats humans use to represent that data. Real-world data has everything from sign (positive or negative) to fractional and integral values. However, when we work with hardware, all we see are registers and wires, which are simply variables capable of holding a sequence of bits. All we get to choose is the length of this sequence. There is simply no other parameter involved in defining these variables. As the author of this excellently written whitepaper on fixed-point arithmetic puts it:

"The salient point is that there is no meaning inherent in a binary word, although most people are tempted to think of them (at first glance, anyway) as positive integers. However, the meaning of an N-bit binary word depends entirely on its interpretation, i.e., on the representation set and the mapping we choose to use."

The terms fixed-point and floating-point are simply that: representation sets and mapping schemes that have been widely used and standardized by the community. You could just as well come up with your own representation and use it to interpret these binary words.
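To make the quote concrete, here is a small sketch that reads one 8-bit word under three different interpretations. The helper names (`as_unsigned`, `as_signed`, `as_fixed`) are made up for this illustration, and the choice of 4 fractional bits is an arbitrary assumption.

```python
def as_unsigned(word: int, width: int) -> int:
    """Read the bits as a plain non-negative integer."""
    return word & ((1 << width) - 1)


def as_signed(word: int, width: int) -> int:
    """Read the same bits as a two's complement integer."""
    value = as_unsigned(word, width)
    # If the top bit is set, the word represents value - 2**width.
    return value - (1 << width) if value >> (width - 1) else value


def as_fixed(word: int, width: int, frac_bits: int) -> float:
    """Read the same bits as two's complement with frac_bits fractional bits."""
    return as_signed(word, width) / (1 << frac_bits)


word = 0b10110100
print(as_unsigned(word, 8))   # 180
print(as_signed(word, 8))     # -76
print(as_fixed(word, 8, 4))   # -4.75
```

The bits never change; only the mapping we apply to them does.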

There's probably enough material out in the open that can teach you about the basics of representing numbers on computers and the differences between fixed-point and floating-point numbers.

The hardware itself has no concept of fixed-point or floating-point numbers. It just recognizes fields with certain bit widths. This means that we need a layer above the hardware that generates numbers in the chosen format with the chosen precision, and that helps us interpret the results the hardware gives us by converting the raw binary words back into that format.

We implement this upper layer in Python, mainly because of its simplicity and code readability.

The Format

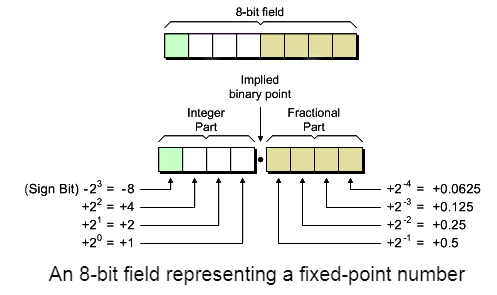

Fixed-point numbers are those in which the position of the binary point remains fixed, independent of the value the number represents. This makes fixed-point numbers easier to understand, and easier to implement in hardware, than floating-point numbers. Fixed-point arithmetic also uses far fewer resources than floating-point arithmetic. Of course, all of this comes at a tradeoff. For a given bit-width, floating-point arithmetic covers a much wider dynamic range and keeps its relative precision roughly constant across magnitudes. With fixed-point we take a hit in quantization noise, because the binary point stays in a fixed position regardless of the magnitude being represented.

We shall be using a signed 2's complement fixed-point representation in which, as shown in the image above, the first bit is the sign bit, and if the number is negative (sign bit = 1) it is assumed to be in 2's complement form. We need to be mindful of this when dealing with negative numbers.

There are several notations for denoting the parameters of a fixed-point number. The most popular one is probably the A(a,b) format, in which a is the number of bits used to represent the integer portion of the number and b is the number of bits used to represent the fractional portion. The total number of bits used to store an A(a,b) fixed-point number is therefore N = a + b + 1, the extra bit being the sign bit.
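As a quick way to compare candidate formats, the sketch below computes the word length, representable range, and resolution of an A(a,b) number. It assumes the whole N-bit word is read as two's complement, and the function name `fixed_point_properties` is invented for this example.

```python
def fixed_point_properties(a: int, b: int):
    """Return (total_bits, min_value, max_value, resolution) for A(a,b)."""
    total_bits = a + b + 1            # integer bits + fraction bits + sign bit
    resolution = 2.0 ** -b            # value of one least-significant bit
    min_value = -(2.0 ** a)           # most negative two's complement value
    max_value = 2.0 ** a - resolution # largest positive value
    return total_bits, min_value, max_value, resolution


print(fixed_point_properties(7, 8))
# (16, -128.0, 127.99609375, 0.00390625)
```

Moving one bit from a to b halves the range and halves the step size, which is the range-versus-precision trade in a single line.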

The values of a and b should be selected based on the range and precision you need for the problem at hand. For example, if all or most of the numbers you're trying to represent have a very small magnitude, you might give up range for precision, i.e., use fewer bits for the integral portion and more bits for the fractional portion, thus achieving greater precision in your arithmetic. This is a decision you need to make on the basis of the exact application where your hardware will be used.

If you need more detail on fixed-point numbers, go through this document.

The Golden Model

Before we move on to implementing the fixed-point arithmetic in Verilog, let's create a golden model, i.e., a model of the target functionality that gives us the correct outputs. We can use these to check the results from the actual Verilog implementation and track down bugs whenever the two disagree.

We define a few functions that convert numbers between the standard floating-point representation that Python uses and the fixed-point representation that we want our hardware to use.

Below is the code for a function that converts a number in the standard float format to a user-specified fixed-point format. The variable integer_precision is the number of bits used to represent the integer part (before the binary point) of the fixed-point number, and the variable fraction_precision is the number of bits used for the fractional part (after the binary point).

NOTE: When I mention a float variable from here on, I mean the 'float' data type in Python. Under the hood, CPython stores it as a 64-bit IEEE 754 double-precision floating-point number.
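A minimal sketch of such a float-to-fixed conversion is shown here. The function name `float_to_fp` is an assumption, as are two design choices: it rounds to the nearest representable value, and it produces the two's complement bits with integer masking rather than through a separate helper. Treat it as one possible golden-model implementation, not as the reference one.

```python
def float_to_fp(value: float, integer_precision: int, fraction_precision: int) -> str:
    """Convert a Python float to a signed fixed-point bit string.

    The result has 1 sign bit + integer_precision + fraction_precision bits.
    """
    total_bits = 1 + integer_precision + fraction_precision
    # Scale so that the fractional bits become part of an integer.
    scaled = round(value * (1 << fraction_precision))
    lowest = -(1 << (total_bits - 1))
    highest = (1 << (total_bits - 1)) - 1
    if not (lowest <= scaled <= highest):
        raise OverflowError(f"{value} does not fit in {total_bits} bits")
    # Masking a negative Python int yields its two's complement bit pattern.
    return format(scaled & ((1 << total_bits) - 1), f"0{total_bits}b")


print(float_to_fp(2.5, 3, 4))    # 00101000
print(float_to_fp(-2.5, 3, 4))   # 11011000
```

Raising on overflow is a deliberate choice for a golden model: a silent wrap-around here would hide exactly the kind of bug the model is meant to catch.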


The 'twos_comp' function used by this code is another helper that generates the 2's complement of any binary number. Here is the code:
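One way to write such a helper is sketched here, assuming the binary number arrives as a string of '0' and '1' characters and that the result should keep the same width. The signature is an assumption and may differ from the one used in the repository.

```python
def twos_comp(bits: str) -> str:
    """Return the two's complement of a bit string, keeping its width."""
    width = len(bits)
    mask = (1 << width) - 1
    inverted = int(bits, 2) ^ mask       # flip every bit
    negated = (inverted + 1) & mask      # add one, discard any carry-out
    return format(negated, f"0{width}b")


print(twos_comp("00101000"))  # 11011000
print(twos_comp("11011000"))  # 00101000
```

Applying the function twice returns the original word, which makes for an easy sanity check.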


Let's run these functions and see what we've built:


And finally a function to convert data from our fixed-point representation to a human readable float variable:
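A minimal sketch of the reverse conversion, assuming the input is a bit string laid out as sign bit, integer bits, then fraction bits. The name `fp_to_float` is hypothetical.

```python
def fp_to_float(bits: str, integer_precision: int, fraction_precision: int) -> float:
    """Convert a signed fixed-point bit string back to a Python float."""
    total_bits = 1 + integer_precision + fraction_precision
    if len(bits) != total_bits or set(bits) - {"0", "1"}:
        raise ValueError(f"expected a {total_bits}-bit binary string")
    raw = int(bits, 2)
    # A set sign bit means the word encodes raw - 2**total_bits.
    if bits[0] == "1":
        raw -= 1 << total_bits
    # Undo the scaling applied when the number was converted to fixed-point.
    return raw / (1 << fraction_precision)


print(fp_to_float("00101000", 3, 4))  # 2.5
print(fp_to_float("11011000", 3, 4))  # -2.5
```

This is the function to run over raw simulation output before comparing it against the values the golden model expects.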


Let's see how well this function is working:


Finally, just for our heart's satisfaction, let's see what happens when we nest these functions together!


WHOA! What just happened here? How come the value is different when converted back and forth between fixed-point and float representation?

That, my friends, is the limited precision of the fixed-point representation in action. When we store a float variable in Python, the CPU actually stores it as a 64-bit floating-point number in memory. When we convert that to a 16-bit fixed-point representation, we lose a teeny tiny bit of precision, because the fixed-point format does not have enough bits to match the precision of the 64-bit floating-point variable.
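To put a number on that loss, here is a small sketch that quantizes a value to a given number of fractional bits and reports the error. The helper `quantize` is made up for this illustration and assumes round-to-nearest; with truncation instead, the worst-case error would be one full step rather than half a step.

```python
def quantize(value: float, fraction_precision: int) -> float:
    """Round value to the nearest multiple of 2**-fraction_precision."""
    scale = 1 << fraction_precision
    return round(value * scale) / scale


value = 3.14159
for fraction_precision in (4, 8, 12):
    approx = quantize(value, fraction_precision)
    error = abs(approx - value)
    bound = 2.0 ** -(fraction_precision + 1)  # half of one step
    print(fraction_precision, approx, error, error <= bound)
```

Each extra fractional bit halves the worst-case error, which is the practical meaning of "more bits for the fractional portion".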

Designing hardware for fixed-point arithmetic

Now that we have a solid Golden model ready, let's begin coding in Verilog!

The following code snippets are modified versions of the fixed-point arithmetic library from Opencores.

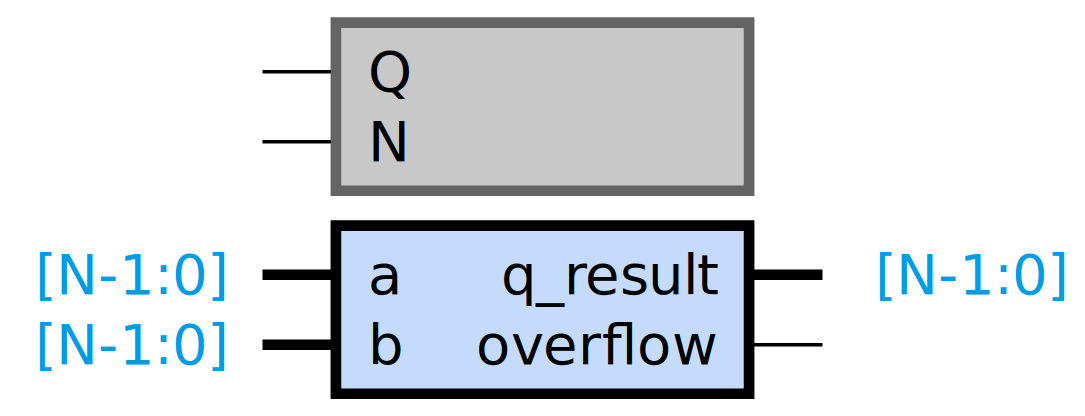

Fixed-point Addition
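For checking an adder's output against the golden model, a bit-accurate reference in Python can be handy. The sketch below assumes both operands are N-bit two's complement words with the same binary point and that the sum wraps to N bits. The names `to_signed` and `fixed_add` are hypothetical, and the Verilog module may treat sign and overflow differently.

```python
def to_signed(word: int, width: int) -> int:
    """Interpret the low `width` bits of word as a two's complement integer."""
    word &= (1 << width) - 1
    return word - (1 << width) if word >> (width - 1) else word


def fixed_add(a: int, b: int, width: int):
    """Add two fixed-point words; return (wrapped_result, overflow_flag)."""
    total = to_signed(a, width) + to_signed(b, width)
    lowest = -(1 << (width - 1))
    highest = (1 << (width - 1)) - 1
    overflow = not (lowest <= total <= highest)
    return total & ((1 << width) - 1), overflow


# 8-bit words with 4 fractional bits: 2.5 + (-1.25) = 1.25
print(fixed_add(0x28, 0xEC, 8))   # (20, False)
# 7.0 + 7.0 does not fit in the same format
print(fixed_add(0x70, 0x70, 8))   # (224, True)
```

Because both operands share a binary point, addition needs no rescaling: the fixed-point adder is an ordinary integer adder plus an overflow check.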


Fixed-point Multiplication


Wait a second, it takes 2*N bits to hold the result of a multiplication between two N-bit numbers, right? Right.

Then why is our output the same width as the multiplier and multiplicand?

This is because of the quantization that needs to be done at some point in the process in order to preserve the width of our datapath. If we kept increasing the size of the output at every multiplication we encounter, we would end up with an insanely large datapath. This quantization adds what is called quantization noise to our data, since we're truncating the 2*N-bit product to N bits. We need to take care not to overflow and lose too much information in the truncation process. In practice this usually holds when the numbers are small and stay within the dynamic range of the fixed-point representation.
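The truncation can be modelled in a few lines of Python. This sketch assumes N-bit two's complement operands with `frac_bits` fractional bits each: the full product then carries 2 * frac_bits fractional bits, so shifting right by frac_bits restores the original binary point before the result is cut back to N bits. The function names are hypothetical, and the Verilog module may round or handle the sign differently.

```python
def to_signed(word: int, width: int) -> int:
    """Interpret the low `width` bits of word as a two's complement integer."""
    word &= (1 << width) - 1
    return word - (1 << width) if word >> (width - 1) else word


def fixed_mul(a: int, b: int, width: int, frac_bits: int):
    """Multiply two fixed-point words; return (truncated_result, overflow_flag)."""
    product = to_signed(a, width) * to_signed(b, width)  # needs up to 2*width bits
    scaled = product >> frac_bits       # drop the extra fractional bits (floor)
    lowest = -(1 << (width - 1))
    highest = (1 << (width - 1)) - 1
    overflow = not (lowest <= scaled <= highest)
    return scaled & ((1 << width) - 1), overflow


# 8-bit words with 4 fractional bits
print(fixed_mul(0x28, 0x18, 8, 4))  # 2.5 * 1.5 = 3.75          -> (60, False)
print(fixed_mul(0x11, 0x11, 8, 4))  # 1.0625**2 truncates to 1.125 -> (18, False)
print(fixed_mul(0x40, 0x40, 8, 4))  # 4.0 * 4.0 overflows        -> (0, True)
```

The second call shows the quantization noise (the exact product is 1.12890625), and the third shows the overflow case the paragraph above warns about.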

All the design files, along with their test benches, can be found in the GitHub repo.